Benchmarking Analysis: Widescreen vs. Surround

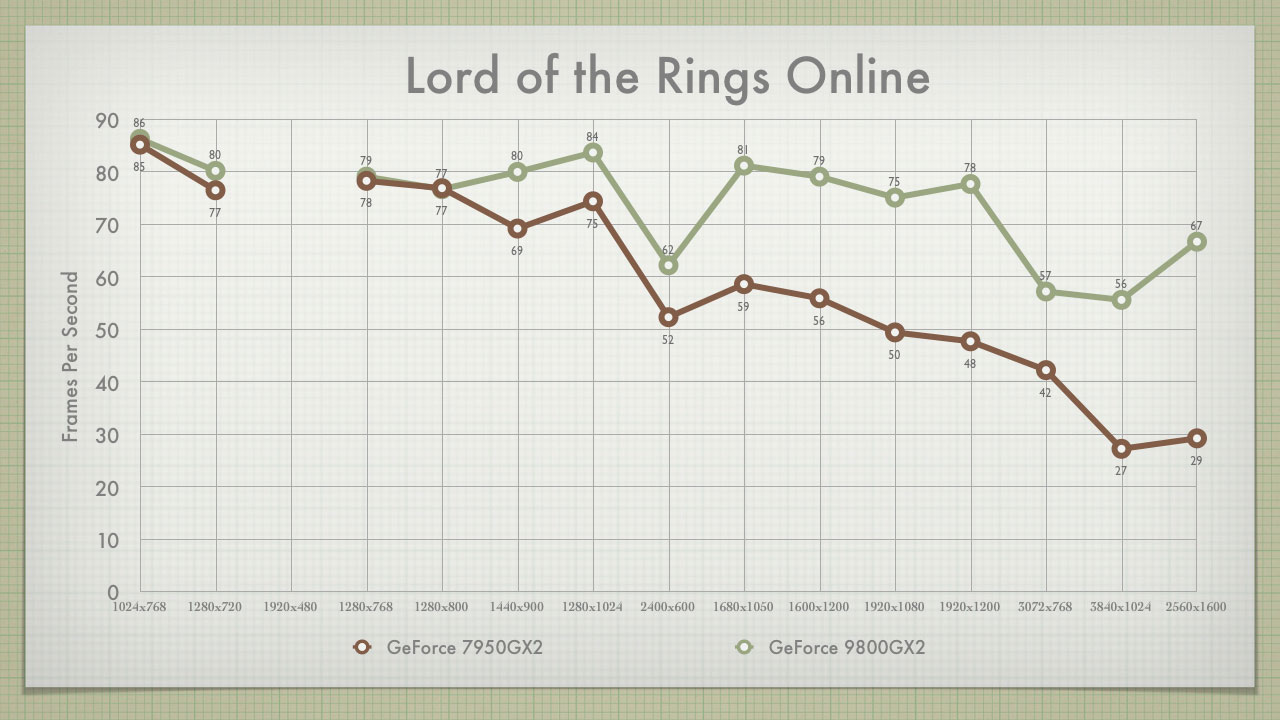

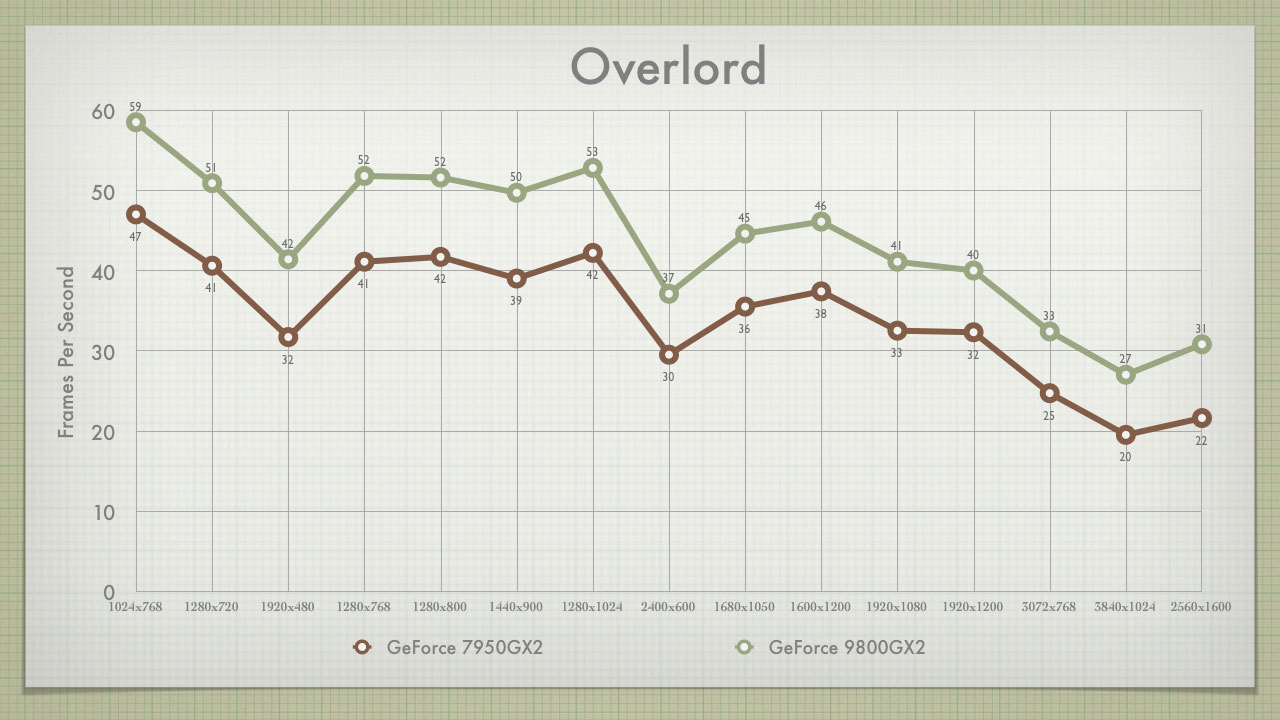

Pulling the data from my review of the NVIDIA GeForce 9800GX2, I set out to determine the impact that a Widescreen or Surround Gaming aspect ratio has on frame rates.

We all know that increasing resolution decreases fps. But what if that wasn't always the case? What if 1280x1024 performed better than a widescreen resolution with a similar pixel count, due to its smaller FOV? What if Surround takes a major hit, even at low resolutions, due to its increased FOV? And if these are true (find out below), where does widescreen fall? I ran a series of benchmarks at twelve common resolutions for my review of the NVIDIA GeForce 9800GX2, and by analyzing that data I've been able to determine the impact of FOV on fps.

As of now, my rig stands at:

- GeForce 9800GX2 Reference Board from NVIDIA at 600MHz Core and 2000MHz Memory

- P5N32-SLI Premium/WiFi-AP

- Intel E6700 Core2Duo at 2x2.667GHz

- 4GB XMS2 Corsair RAM

- 2x Samsung 320GB T-Series HDD (one for the OS and games; one for swap file and FRAPS)

- LiteOn SATA Optical Drive

- Sound Blaster X-Fi XtremeGamer Fatal1ty Pro

- Enermax Infiniti 720W

- Lian-Li Black PC-777

- Dell 3007WFP

- Matrox Digital TripleHead2Go

- 3x HP LP1965

- Logitech G15 Keyboard & G5 Mouse

The Benchmarks

Seven games, in twelve resolutions, for each of two video cards. That's a lot of time, and a lot of frames.

The Effect of Aspect Ratio

We all know that the number of pixels affects fps (frames per second). But how does the aspect ratio affect fps? Increasing the resolution adds detail to the on-screen objects, but increasing the aspect ratio (and with it the FOV) adds objects to the game "window." Does FOV have an effect on fps beyond (or even greater than) that of a simple increase in resolution? To help answer this question, I benchmarked every game at every common resolution, covering everything from 5:4 (the narrowest FOV) to 16:4 (3x4:3 Surround Gaming). The short answer is yes. Aspect ratio has a major impact on fps, and it often has a greater impact than increased resolution.

Here is a table that outlines the Aspect Ratios covered in my testing:

| Aspect Ratio | 5:4 | 4:3 | 16:10 | 15:9 | 16:9 | 15:4 | 16:4 |
|---|---|---|---|---|---|---|---|
| Common Resolutions | 1280x1024 | 1024x768, 1600x1200 | 1280x800, 1680x1050, 1920x1200, 2560x1600 | 1280x768 | 1280x720, 1920x1080 | 3840x1024 | 3072x768 |
| Horizontal FOV (degrees) | 100 | 103.6 | 113.5 | 115.6 | 118.9 | 148.7 | 150.6 |
| Increase from 4:3 | -3.47% | --- | 9.56% | 11.6% | 14.8% | 43.5% | 45.4% |
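The FOV row is consistent with Hor+ scaling. In this model the vertical FOV stays fixed and tan(hFOV/2) grows in proportion to the aspect ratio. The sketch below is my reconstruction and not necessarily how the figures were produced: it assumes the 5:4 entry is pinned at exactly 100 degrees and recomputes the rest of the row, which agrees with the table to within rounding.

```python
import math

# Assumption: Hor+ scaling anchored so that 5:4 gives a 100-degree horizontal FOV.
BASE_ASPECT = 5 / 4
BASE_HFOV_DEG = 100.0


def hor_plus_hfov(aspect: float) -> float:
    """Horizontal FOV in degrees for a Hor+ game at the given aspect ratio."""
    half_base = math.radians(BASE_HFOV_DEG / 2)
    # At a fixed vertical FOV, tan(hfov / 2) grows in proportion to the aspect ratio.
    half = math.atan(math.tan(half_base) * aspect / BASE_ASPECT)
    return math.degrees(2 * half)


ASPECTS = {
    "5:4": 5 / 4, "4:3": 4 / 3, "16:10": 16 / 10, "15:9": 15 / 9,
    "16:9": 16 / 9, "15:4": 15 / 4, "16:4": 16 / 4,
}

ref = hor_plus_hfov(ASPECTS["4:3"])
for name, ratio in ASPECTS.items():
    fov = hor_plus_hfov(ratio)
    print(f"{name:>5}: {fov:6.1f} deg, {100 * (fov / ref - 1):+6.2f}% vs. 4:3")
```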

Benchmarking Analysis: Widescreen vs. Surround - Details

921,600px & 768px Vert Resolutions

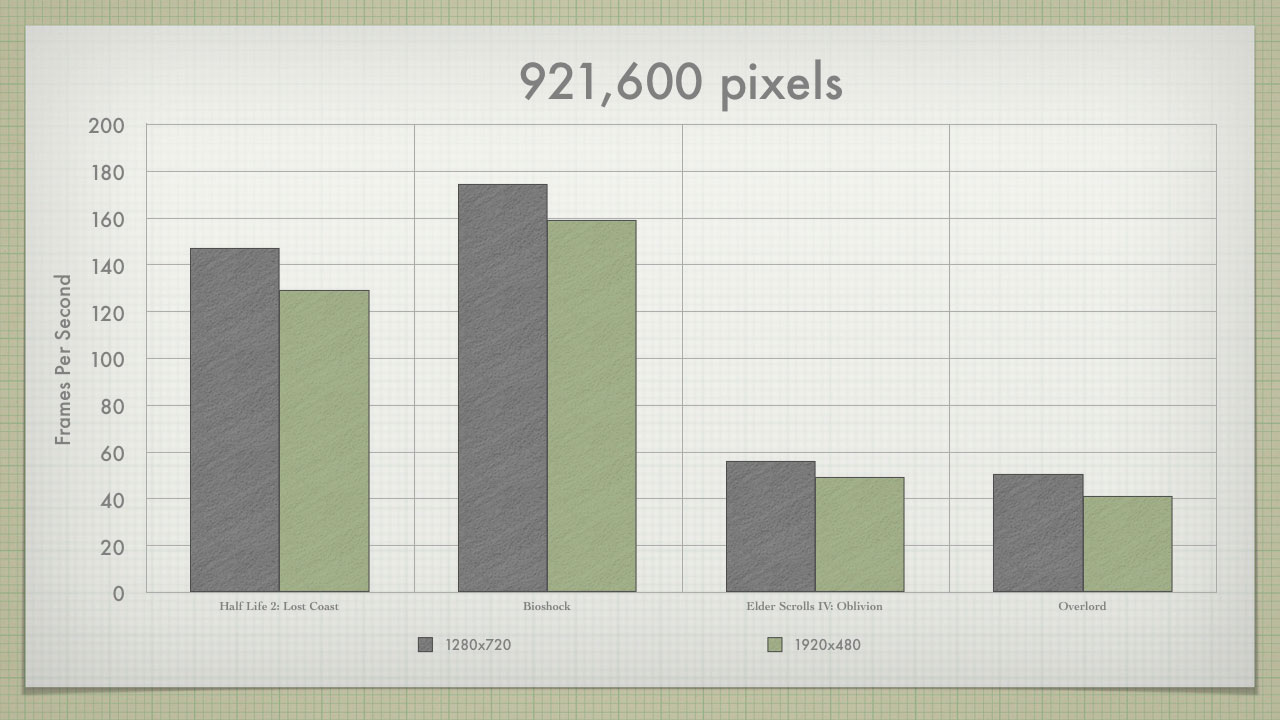

A funny thing happened on the way to benchmarking: I realized that 1280x720 and 1920x480 both have the same number of pixels, 921,600. So, assuming that the graphics card is rendering the same number of dots, what is the impact of the increased number of on-screen objects?

The TH2Go FOV is 26.6% wider than that of 1280x720. Will TH2Go take a 26.6% hit on frame rate? Moving from widescreen to Surround resulted in the following drops in fps, even though the exact same number of pixels is rendered:
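The 921,600-pixel comparison can be checked with the same Hor+ model that reproduces the aspect-ratio table. As before, this rests on my own assumption of a fixed vertical FOV with 5:4 pinned at 100 degrees. Both resolutions push exactly the same pixels, so only the horizontal FOV differs. The model puts that gap at roughly 26.6 to 26.7%, depending on rounding.

```python
import math


def hor_plus_hfov(aspect, base_aspect=5 / 4, base_hfov_deg=100.0):
    """Assumed Hor+ model: fixed vertical FOV, horizontal FOV widens with aspect."""
    t = math.tan(math.radians(base_hfov_deg / 2)) * aspect / base_aspect
    return math.degrees(2 * math.atan(t))


widescreen = (1280, 720)  # 16:9
surround = (1920, 480)    # 16:4, three 640x480 screens via TripleHead2Go

# Pixel count per resolution: identical for both setups.
pixels = {res: res[0] * res[1] for res in (widescreen, surround)}

fov_wide = hor_plus_hfov(widescreen[0] / widescreen[1])
fov_surround = hor_plus_hfov(surround[0] / surround[1])
fov_gain = fov_surround / fov_wide - 1

print(pixels)
print(f"Surround FOV is {100 * fov_gain:.1f}% wider at the same pixel count")
```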

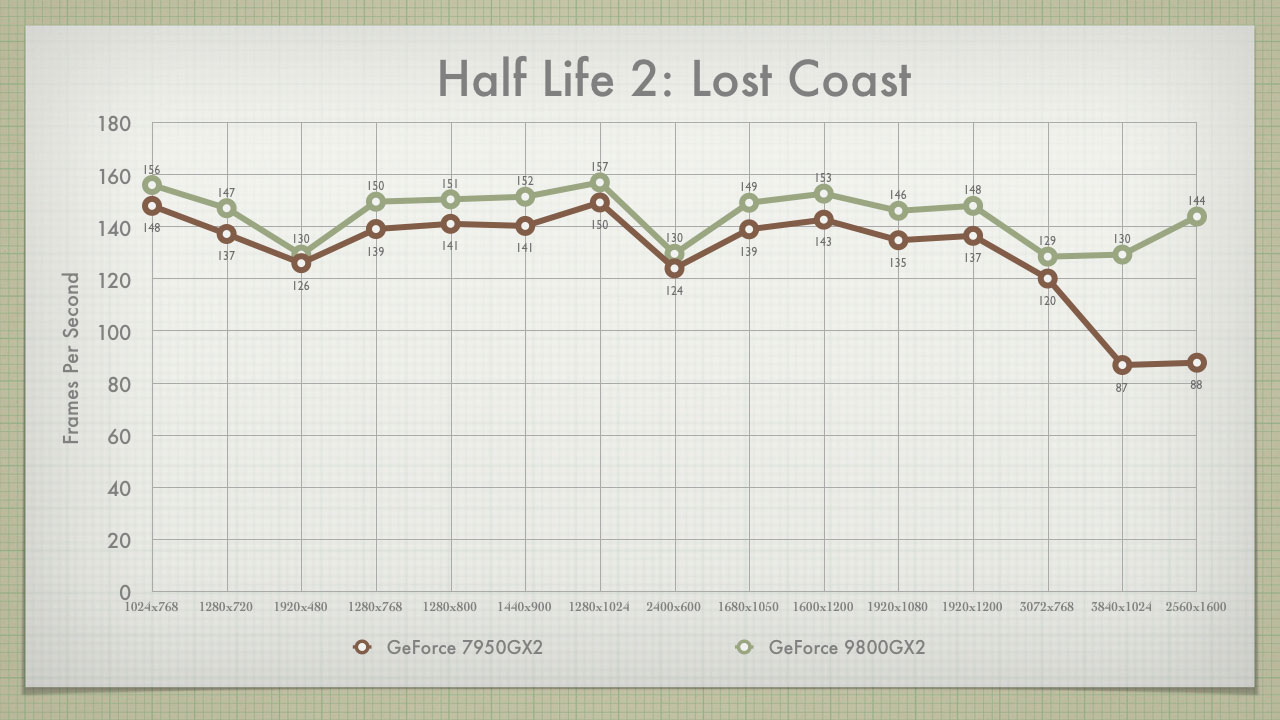

- Half-Life 2 - 18fps, 13.8%

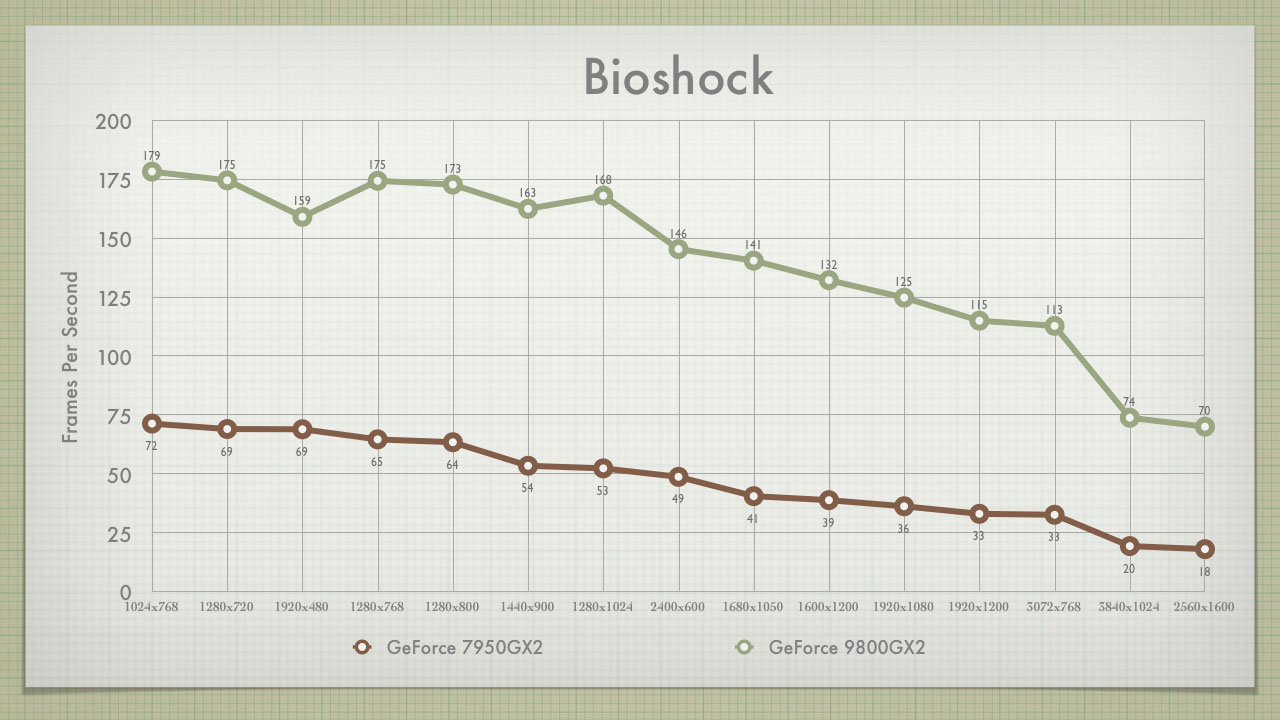

- Bioshock - 16fps, 9.7%

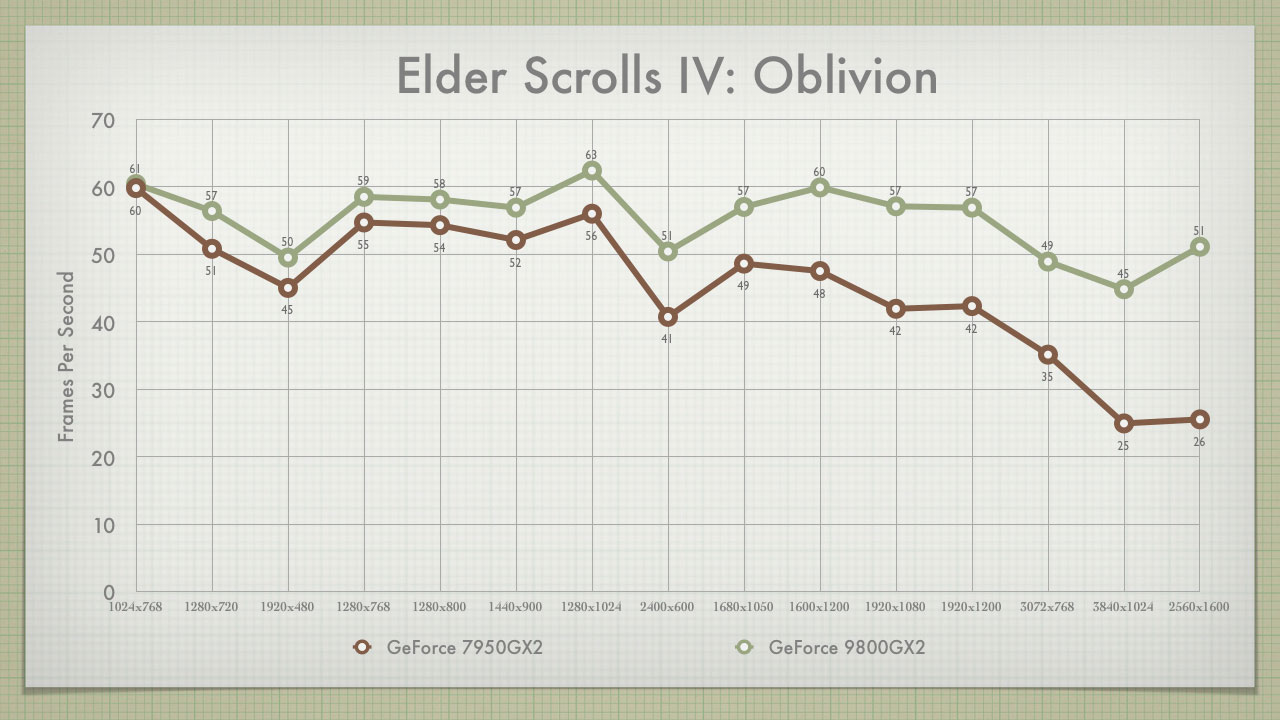

- Oblivion - 7fps, 13.9%

- Overlord - 22.9%

While not a 26% drop, moving to a Surround Gaming FOV does bring a significant performance hit. For an additional look, the graph below shows the performance impact of moving from 4:3, to widescreen, to Surround. It tracks the performance impact of the increasing FOV while maintaining a 768px vertical resolution.

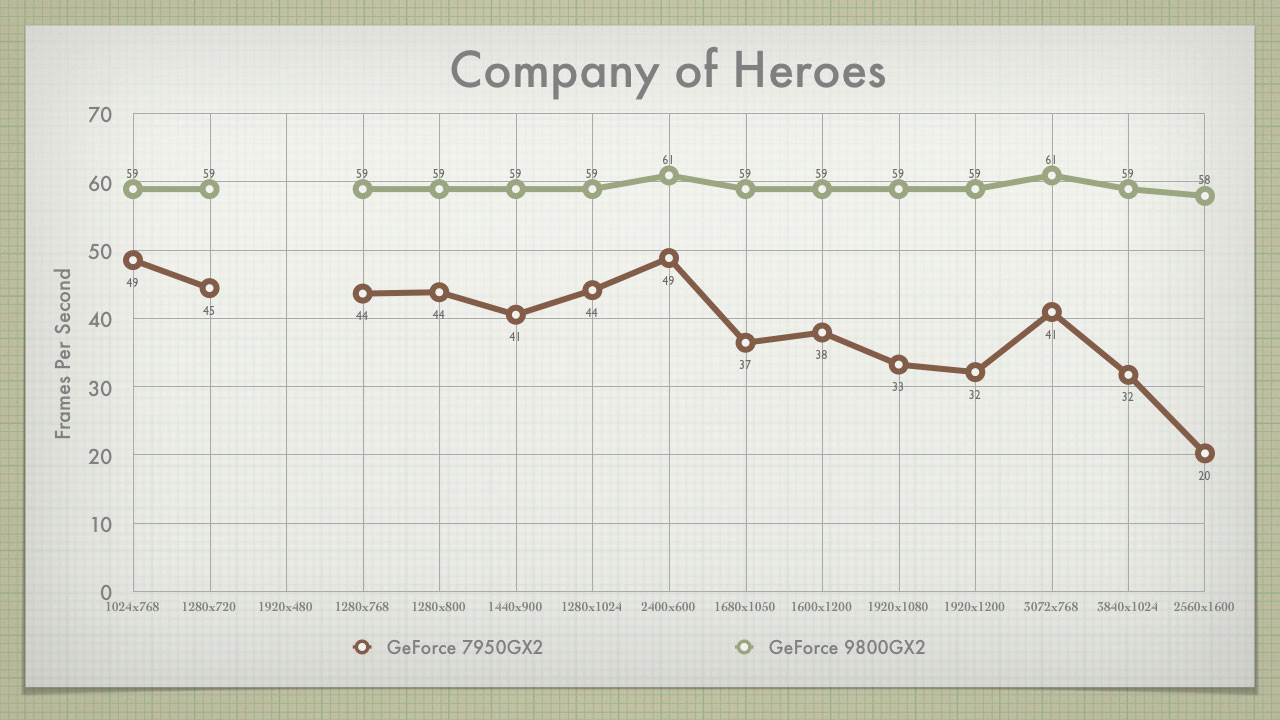

In general, the move from 1024x768 to 1280x768 brings a small performance hit. This resolution increase represents a 25% increase in pixels and an 11.6% increase in FOV. The move from 1280x768 to 3072x768 brings a more pronounced performance hit (as would be expected), with the pixel count increasing by 140% and the FOV by 30.2%.
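The percentages in this paragraph follow directly from the resolutions. This sketch recomputes them by reusing the Hor+ FOV model I assumed for the aspect-ratio table (fixed vertical FOV, 5:4 pinned at 100 degrees). The `gains` helper is hypothetical and exists only for illustration.

```python
import math


def hor_plus_hfov(aspect, base_aspect=5 / 4, base_hfov_deg=100.0):
    """Assumed Hor+ model: fixed vertical FOV, horizontal FOV widens with aspect."""
    t = math.tan(math.radians(base_hfov_deg / 2)) * aspect / base_aspect
    return math.degrees(2 * math.atan(t))


def gains(before, after):
    """Fractional change in pixel count and horizontal FOV between two resolutions."""
    pixel_gain = (after[0] * after[1]) / (before[0] * before[1]) - 1
    fov_gain = hor_plus_hfov(after[0] / after[1]) / hor_plus_hfov(before[0] / before[1]) - 1
    return pixel_gain, fov_gain


steps = [(1024, 768), (1280, 768), (3072, 768)]  # 4:3 -> 15:9 -> 16:4, all 768 lines tall
for before, after in zip(steps, steps[1:]):
    px, fov = gains(before, after)
    print(f"{before[0]}x{before[1]} -> {after[0]}x{after[1]}: pixels {px:+.0%}, FOV {fov:+.1%}")
```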

XHD & Surround Gaming Resolutions

One of the most frequent questions asked at the WSGF goes something like this, "I'm thinking of getting a TripleHead2Go. Can my system handle it?" This is usually followed up with, "If I have to play at a lower resolution, how good is the scaling?" The sister set of questions involves upgrading to the high end of widescreen panels (1920x1200 and 2560x1600).

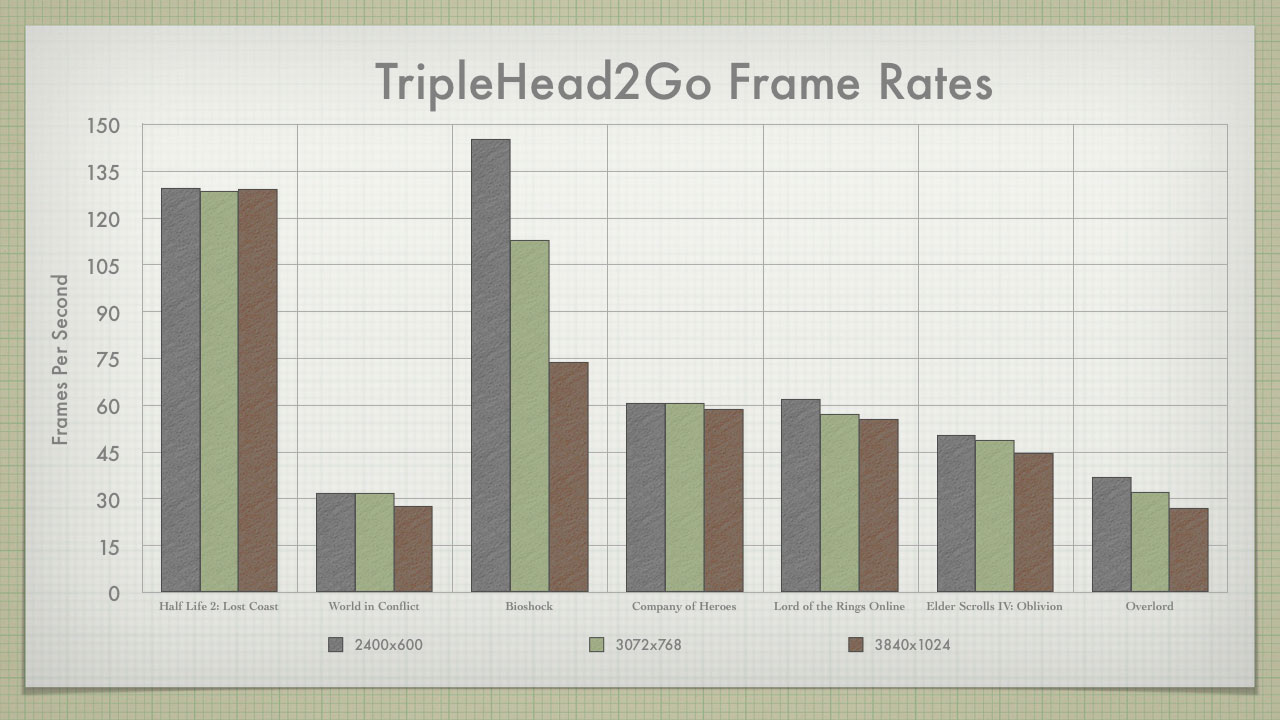

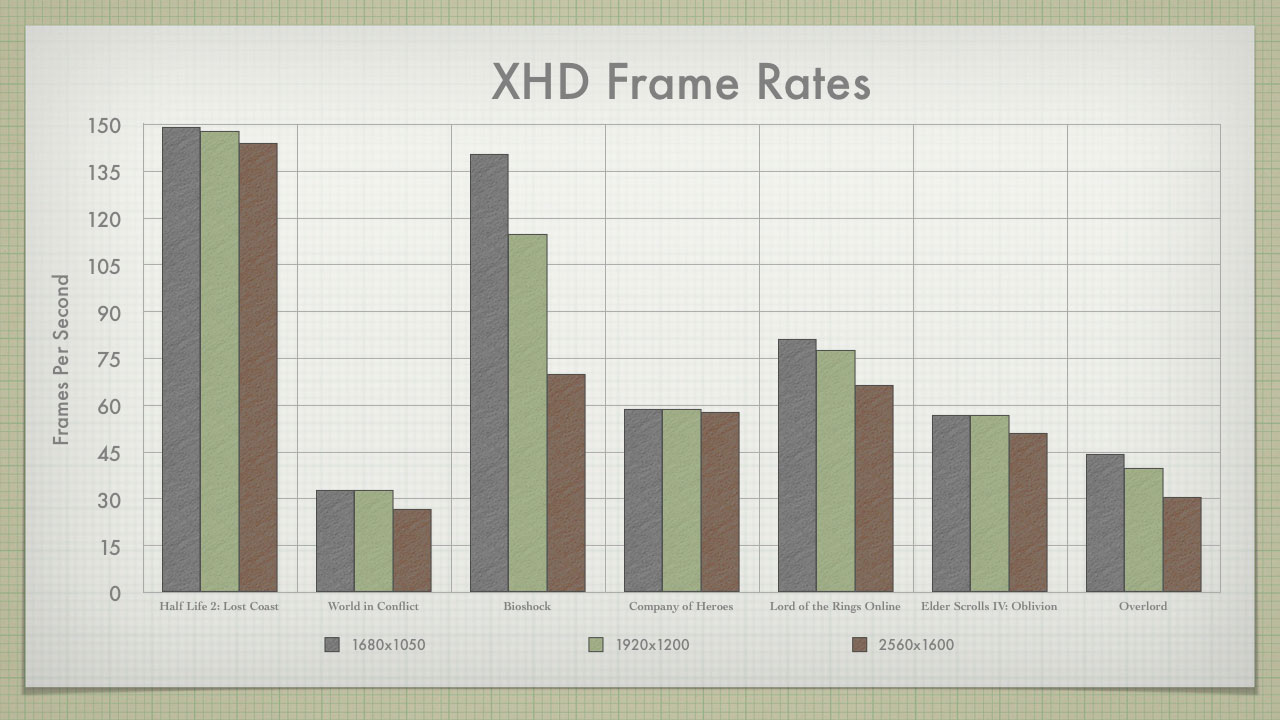

In an effort to help answer these questions, I set about comparing the relevant scores. For TripleHead2Go, I compared the top three resolutions - 2400x600, 3072x768 and 3840x1024. For widescreen, I compared the three resolutions in NVIDIA's XHD marketing initiative - 1680x1050, 1920x1200 and 2560x1600.
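For context, here is the pixel arithmetic for the two sets of resolutions compared here. Going from the smallest to the largest resolution in each set multiplies the pixel count by roughly 2.7x for TripleHead2Go and 2.3x for XHD. The `pixel_table` helper is a hypothetical name used only for this sketch.

```python
# Pixel counts for the two sets of resolutions compared in this section.
TH2GO = [(2400, 600), (3072, 768), (3840, 1024)]
XHD = [(1680, 1050), (1920, 1200), (2560, 1600)]


def pixel_table(resolutions):
    """Return (label, megapixels, multiple of the smallest entry) for each resolution."""
    base = resolutions[0][0] * resolutions[0][1]
    return [(f"{w}x{h}", w * h / 1e6, w * h / base) for w, h in resolutions]


for name, group in (("TripleHead2Go", TH2GO), ("XHD", XHD)):
    print(name)
    for label, megapixels, multiple in pixel_table(group):
        print(f"  {label:>9}: {megapixels:.2f} MP ({multiple:.2f}x)")
```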

For TripleHead2Go, the increase in resolution had a minimal impact on fps. The one exception is Bioshock, but even then the game stayed above 60fps at the top end. The DTH2Go does a great job of scaling, but with a 9800GX2 you shouldn't need to consider dropping your resolution. The 7950GX2, which is nearly two years old, provided consistent results up through 3072x768.

Remember, all of these scores were obtained with all settings maxed out. Consider LOTRO as an example: the game ran at almost 60fps at 3840x1024 with "Ultra High" settings, and dropping to "Very High" raised the average to 90fps. There are plenty of opportunities to make small tweaks to the quality settings before you need to consider a drop in resolution. The bottom line is that once you take the hit for the Surround Gaming aspect ratio, increasing the resolution has a minimal impact.

Benchmarks in the XHD resolutions told a similar story. The impact of moving from 1680x1050 to 1920x1200 was minimal, while the next move to 2560x1600 provided a bit more of an impact. And, as with the top end of the TH2Go, lowering the quality settings a bit can keep you from having to drop resolution.

Future games that will tax your hardware are always on the horizon, but that shouldn't stop you from making an investment in the best monitor and viewing experience possible. A GeForce 9800GX2 is enough to power what is out there today (well, except for Crysis) at the highest resolutions.

Benchmarking Analysis: Widescreen vs. Surround - Upgrading & Conclusions

Upgrading to Widescreen

This last section concerns upgrading from an existing 4:3 screen to a widescreen. Will moving from a common 4:3 (or 5:4) panel to a widescreen panel produce a noticeable hit to your framerates? In general, the answer is no (with the exception of Bioshock). Moving to a widescreen will result in a drop in fps, but the drop isn't enough to impact your gaming experience, and the increase in FOV easily outweighs it. One interesting finding is that a move to widescreen will reduce your fps even when the pixel count drops. Much like we saw in the 921,600px comparison, aspect ratio and FOV almost always trump pixel count.

The first chart examines upgrading from a 17" (or possibly a 19") 4:3 panel. These panels carry a 4:3 physical aspect size, but their resolution of 1280x1024 is in fact a 5:4 resolution. The 5:4 aspect ratio is the narrowest available, so moving to a widescreen provides a larger FOV change than a move from a 4:3 panel. A 17" widescreen has a resolution of 1440x900, which is slightly fewer total pixels than the 1280x1024.

Even though the 9800GX2 was pushing about 1% fewer pixels at 1440x900, framerates dropped across the board. Half-Life 2, World in Conflict and Bioshock all fell by about 3%. Company of Heroes fell by almost 5%, while Overlord fell by 6%. Topping the list was Oblivion, with a framerate hit of almost 10%.

A widescreen in the 20"-22" range carries a resolution of 1680x1050, almost 35% more pixels than 1280x1024. With the 9800GX2, almost all the games tested posted similar numbers at 1440x900 and 1680x1050. The two with noticeable differences were Bioshock and Overlord, but both were still very playable. In the end, if you're moving up from a 1280x1024 panel, you should go ahead and opt for a 1680x1050 panel. You will get performance similar to 1440x900, with a much larger viewing area.

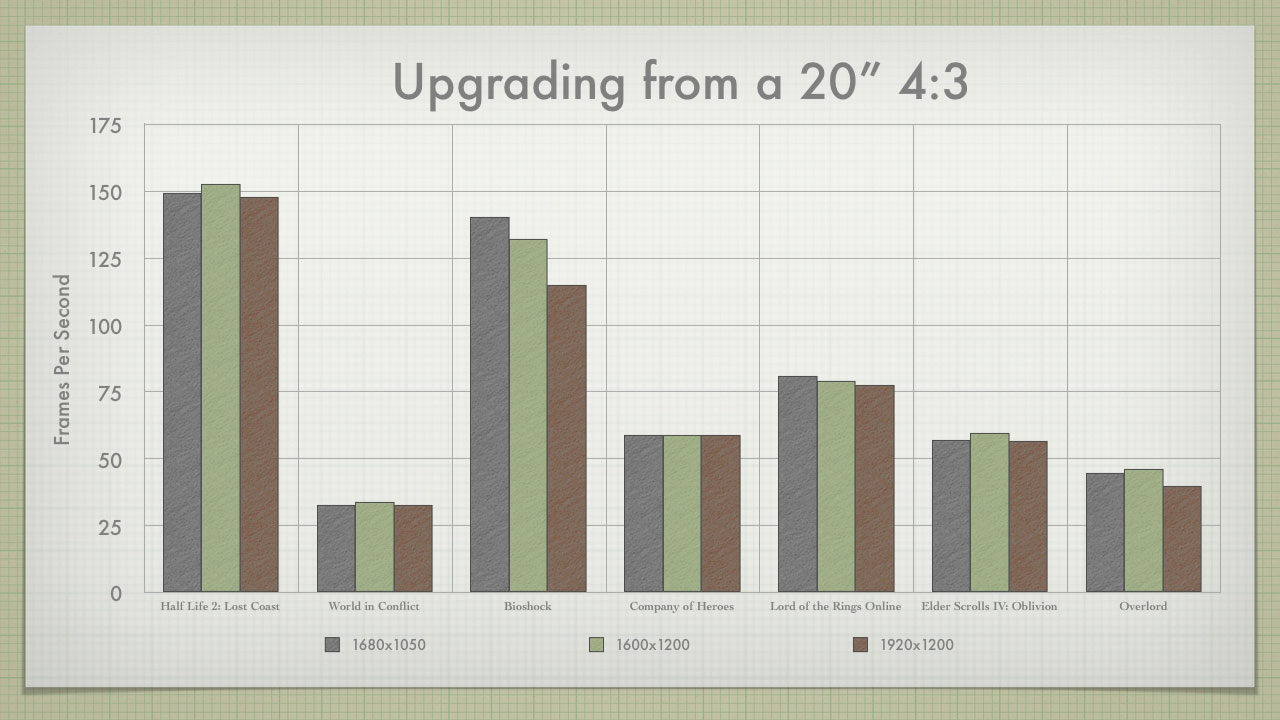

We see a similar story when looking to upgrade from a 20" flat panel (assuming a resolution of 1600x1200). Performance of the higher res panel is often on par with the lower res panels. As with the previous example, it makes sense to spring for the larger panel when upgrading from a 20" 4:3.
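The pixel and FOV arithmetic behind both upgrade paths can be sketched with the Hor+ model that reproduces the FOV row of the aspect-ratio table. This relies on my own assumption of a fixed vertical FOV with 5:4 pinned at 100 degrees. For the 20" case, the widescreen targets (1680x1050 and 1920x1200) are also assumptions, since the text doesn't name the chart's resolutions. Each widescreen target gains the same FOV over its starting panel whatever its pixel count, consistent with the finding that fps can fall even when the pixel count does.

```python
import math


def hor_plus_hfov(aspect, base_aspect=5 / 4, base_hfov_deg=100.0):
    """Assumed Hor+ model: fixed vertical FOV, horizontal FOV widens with aspect."""
    t = math.tan(math.radians(base_hfov_deg / 2)) * aspect / base_aspect
    return math.degrees(2 * math.atan(t))


def compare(old, new):
    """Fractional change in pixel count and horizontal FOV from `old` to `new`."""
    px_change = (new[0] * new[1]) / (old[0] * old[1]) - 1
    fov_change = hor_plus_hfov(new[0] / new[1]) / hor_plus_hfov(old[0] / old[1]) - 1
    return px_change, fov_change


# Upgrade paths: 17"/19" 5:4 panel, then 20" 4:3 panel (widescreen targets are assumed).
UPGRADES = {
    (1280, 1024): [(1440, 900), (1680, 1050)],
    (1600, 1200): [(1680, 1050), (1920, 1200)],
}

for old, targets in UPGRADES.items():
    for new in targets:
        px, fov = compare(old, new)
        print(f"{old[0]}x{old[1]} -> {new[0]}x{new[1]}: pixels {px:+.1%}, FOV {fov:+.1%}")
```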

Conclusions on Aspect and FPS

Widescreen

Without a doubt, moving to a widescreen monitor causes a hit in framerate due to the larger FOV. Adding objects to the screen has a larger impact than simply adding more detail to existing objects. Almost all of the games tested here are certified by the WSGF (Bioshock isn't), which means they are Hor+. The one question left is: what is the impact of a widescreen aspect ratio when the game is Vert-?

I had intended to settle the Vert- question with Bioshock, as the game itself allows for Vert- or Hor+ gameplay. However, its performance across increasing aspect ratios was inconsistent (it didn't follow the pattern shown by the other games tested here), so I wasn't comfortable using it to draw conclusions. Given all the work that went into benchmarking it, I still included it in this article. But I am looking for other games to test the impact of Vert-. With fewer objects on the screen, will the fps increase?

For all of the fanboys that call widescreen users cheaters with an unfair advantage, remind them that this "advantage" does come at the cost of fps - even at a lower resolution.

Surround Gaming

I love Surround Gaming. After gaming on a Digital TripleHead2Go, it's hard to go back to "normal" widescreen. The significantly increased FOV in Surround Gaming has a measurable impact on fps. But once you cross that threshold, increasing the resolution often has little impact (with a capable video card, like the 9800GX2). This should come as consolation to those considering a TH2Go setup: if your rig can push the aspect ratio, it can push the high-end pixels. We often saw games running at the same fps across 1920x480, 2400x600 and 3072x768. Only 3840x1024 has any real potential to run at a lower fps.

If you wanted to hedge your bets, you could set up a TH2Go rig with 3x17" screens, each at 1024x768. Personally, with the investment of a TH2Go setup, I'd simply make sure I had the proper video card setup to run at 3840x1024.

What's Next?

I plan to continue benchmarking new games on the 9800GX2, and I will add new scores here and to the article on Widescreen and Surround performance. I've finally been able to get Vista loaded. I had to make some minor configuration changes to my rig (including going from 4GB to 2GB of RAM). Since I made these changes after this review, I'll have to start from scratch for any DX9 vs. DX10 benchmarks.

I am also going to research how Hor+ and Vert- games compare when upgrading from 4:3 to widescreen. The data here showed that widescreen took a hit to performance, and the assumption is that the hit is due to the increased number of on-screen objects. But what happens when a game decreases the FOV and removes objects?